Structured Response from LLMs

After Henshu

Large language models (LLMs) have revolutionized the way we interact with unstructured text data. They can search for specific information, summarize key points, and even answer straightforward yes-or-no questions with corresponding explanations.

However, for us developers, the outputs generated by LLMs can be cumbersome to handle. These models can certainly generate a paragraph based on our requirements, but that output is unstructured, which poses challenges for those of us who prefer structured data. Instead of presenting users with the raw output from the LLM, we desire the flexibility of structured data.

Making LLMs Generate Structured Data

Function calling is a feature OpenAI introduced with gpt-3.5-turbo-0613 and gpt-4-0613. It lets the LLM request a call to a predefined function: the model returns the function name and arguments, our code runs the function, and the result can be passed back to the model. For instance, the following function can be provided to the Chat Completions API:

    get_current_weather(location: string, unit: 'celsius' | 'fahrenheit')

Upon prompting the LLM with a query like "What is the weather like in London?", GPT responds with a request to call the get_current_weather() function with the location set to "London". The function's output can then be processed to generate a response such as "It's 30 degrees Celsius in London." Impressive, isn't it?
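As a rough sketch of what this can look like in code, the signature above could be written as a JSON Schema function description, and the model's request dispatched to a local implementation. The description strings, the stubbed `get_current_weather` body, the `dispatch` helper, and the sample reply are all assumptions for illustration; nothing here is output from a real API call.

```python
import json

# JSON Schema description of the function, in the shape expected by the
# `functions` parameter of the Chat Completions API.
weather_fn = {
    "name": "get_current_weather",
    "description": "Get the current weather in a given location.",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {"type": "string", "description": "City name, e.g. London."},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["location"],
    },
}


def get_current_weather(location, unit="celsius"):
    """Stub (assumption): a real version would query a weather service."""
    return {"location": location, "temperature": 30, "unit": unit}


def dispatch(function_call):
    """Run the function the model asked for, using its JSON-encoded arguments."""
    available = {"get_current_weather": get_current_weather}
    args = json.loads(function_call["arguments"])
    return available[function_call["name"]](**args)


# Hand-written example of the `function_call` field in a model reply.
sample_call = {"name": "get_current_weather", "arguments": '{"location": "London"}'}
result = dispatch(sample_call)
print(result)
```

Note that the arguments arrive as a JSON string rather than a parsed object, which is why `dispatch` calls `json.loads` before invoking the function.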

Let's delve even deeper!

What if, instead of retrieving data for the LLM through function calls, we equip it with a function to log our desired actions? Let's assume we specify some parameters for the log entry output and instruct the LLM to log this information. You might be surprised to find that GPT will happily comply!
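One detail worth knowing for this trick: with `function_call="auto"` the model decides for itself whether to call a function, while passing a specific function name requires it to call that function. The sketch below only assembles the request arguments and sends nothing. It assumes the pre-1.0 `openai` Python package, and `log_fn` and `build_request` are hypothetical names made up for this example.

```python
# Hypothetical, deliberately tiny "logger" function description.
log_fn = {
    "name": "logger",
    "description": "Logs a one-word summary of the text.",
    "parameters": {
        "type": "object",
        "properties": {"summary": {"type": "string"}},
        "required": ["summary"],
    },
}


def build_request(text, fn, model="gpt-3.5-turbo-0613"):
    """Assemble keyword arguments for openai.ChatCompletion.create (legacy SDK)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": text}],
        "functions": [fn],
        # Naming the function, instead of "auto", requires the model to call it.
        "function_call": {"name": fn["name"]},
    }


request = build_request("The sofa arrived broken.", log_fn)
print(request["function_call"])
```

The resulting dictionary could then be unpacked into the API call with `**request`.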


The Code in Action

Let's walk through a sentiment analysis example. Our aim is for GPT to identify the sentiment in an article, assign a sentiment score, and provide instances that reinforce the identified sentiment.

We can shape our structured response using the options available in the function's parameters. To log the sentiment analysis, we can detail the parameters as a function, as outlined below:

    structuredResponseFn = {
        "name": "logger",
        "description": "The logger function takes a given text and logs the sentiment, a sentiment score, and supporting evidence from the text.",
        "parameters": {
            "type": "object",
            "properties": {
                "sentiment": {
                    "type": "string",
                    "description": "The overarching sentiment of the article.",
                },
                "sentimentScore": {
                    "type": "number",
                    "description": "A number between 0-100 describing the strength of the sentiment.",
                },
                "supportingEvidence": {
                    "type": "array",
                    "items": {
                        "type": "object",
                        "properties": {
                            "example": {
                                "type": "string",
                                "description": "An example of the sentiment in the text.",
                            },
                            "score": {
                                "type": "number",
                                "description": "A number between 0-100 describing the strength of the sentiment example.",
                            },
                        },
                        "required": ["example", "score"],
                    },
                    "description": "A sorted list by score of supporting evidence for the sentiment.",
                },
            },
            "required": [
                "sentiment",
                "sentimentScore",
                "supportingEvidence",
            ],
        },
    }

Next, we'll use the following prompt for GPT, allowing it to utilize the function and generate a response based on sentiment analysis. Remember, GPT might not fill in all the details, so be sure to prompt it for all the return values you'd like it to respond with.

    structuredResponseContent = f"""{article}
    Log the sentiment of the article and provide the top 3 supporting evidences.
    """

Here's the Python snippet that brings the two code pieces together:

    import json

    import openai  # written against the pre-1.0 openai Python package


    def run_structured_response(structuredResponseContent, structuredResponseFn):
        response = openai.ChatCompletion.create(
            model="gpt-3.5-turbo-0613",
            # The prompt goes in as a single user message.
            messages=[{"role": "user", "content": structuredResponseContent}],
            functions=[structuredResponseFn],
            temperature=0.1,
            function_call="auto",
        )
        response_message = response["choices"][0]["message"]
        # The reply carries a function call only if the model chose to use the function.
        if response_message.get("function_call"):
            function_args = json.loads(response_message["function_call"]["arguments"])
            print(function_args)  # print out the structured response

Experimenting

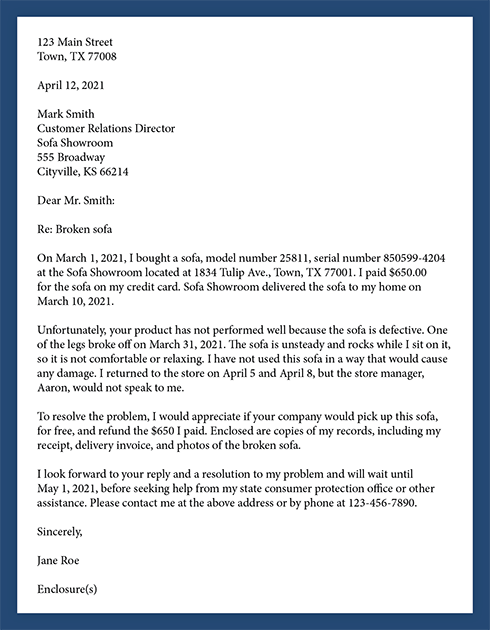

Let's put this function to the test using a sample customer complaint letter. As the letter is a complaint, we can anticipate a negative sentiment. Let's explore how well GPT performs.

Here is the output after invoking `run_structured_response`. We received a JSON object that reflects the sentiment type, a sentiment score, and the top 3 instances from the text that back up the sentiment.

    {
        "sentiment": "negative",
        "sentimentScore": 80,
        "supportingEvidence": [
            {
                "example": "The sofa is defective.",
                "score": 90
            },
            {
                "example": "One of the legs broke off on March 31, 2021.",
                "score": 85
            },
            {
                "example": "The store manager, Aaron, would not speak to me.",
                "score": 75
            }
        ]
    }

Before Henshu

LLMs have been a godsend for working with long text. You can ask it to find certain information. You can ask it to list out the key points. You can even ask it simple yes no questions with a follow up explanation.

However for programmers, LLM outputs are hard to work with. While we can get the LLMs to write out a paragraph about what we want, its unstructured. And programmers don’t like working with unstructured data. We don’t want to directly dump out the the LLM’s output to users. What we want is structured data.

Lets make LLMs return structured data

LLMs with function calling can be prompted to do an action and return structured data.

Function calling is a new feature available on gpt-3.5-turbo-0613 and gpt-4-0613 on OpenAI that allows the LLM to call a pre-described function. For example, we can give the following function to the Chat Completions API.

    get_current_weather(location: string, unit: 'celsius' | 'fahrenheit')

Then prompting the LLM with “What is the weather like in London,” GPT will return with a response to call the get_current_weather() function with the location set to “london.” Read that output and generate a response that say something like “It’s 30 degrees celsius in London.” Pretty neat!

Let’s go one level meta!

What if instead of having it call functions to get data back for the LLM, we gave it a function to log out what it wants to do? Lets say we define what the log entry output should have some given params, and prompt the LLM to log that? Surprisingly it will happily do that!

Show me the code

Let’s work though an sentiment analysis example. We want GPT to output the sentiment from an article, give a score for the sentiment, and give some examples that bolster the given sentiment.

We can define our structured response using the function’s parameter options. To log the sentiment analysis, we can describe the parameters as a function described below:

    structuredResponseFn = {
        "name": "logger",
        "description": "The logger function takes a given text and logs the sentiment, a sentiment score, and supporting evidence from the text.",
        "parameters": {
            "type": "object",
            "properties": {
                "sentiment": {
                    "type": "string",
                    "description": "The overarching sentiment of the article.",
                },
                "sentimentScore": {
                    "type": "number",
                    "description": "A number between 0-100 describing the strength of the sentiment.",
                },
                "supportingEvidence": {
                    "type": "array",
                    "items": {
                        "type": "object",
                        "properties": {
                            "example": {
                                "type": "string",
                                "description": "An example of the sentiment in the text.",
                            },
                            "score": {
                                "type": "number",
                                "description": "A number between 0-100 describing the strength of the sentiment example.",
                            },
                        },
                        "required": ["example", "score"],
                    },
                    "description": "A sorted list by score of supporting evidence for the sentiment.",
                },
            },
            "required": [
                "sentiment",
                "sentimentScore",
                "supportingEvidence",
            ],
        },
    }

Next, we will use this prompt to GPT to use the function and generate a response related to the sentiment analysis. GPT will not necessary try to fill in all the details so be sure to prompt it for all the return values you want it to respond with.

    structuredResponseContent = f"""{article}
    Log the sentiment of the article and provide the top 3 supporting evidences.
    """

Here is the python snippet to put the two code together.

    import json

    import openai  # written against the pre-1.0 openai Python package


    def run_structured_response(structuredResponseContent, structuredResponseFn):
        response = openai.ChatCompletion.create(
            model="gpt-3.5-turbo-0613",
            # The prompt goes in as a single user message.
            messages=[{"role": "user", "content": structuredResponseContent}],
            functions=[structuredResponseFn],
            temperature=0.1,
            function_call="auto",
        )
        response_message = response["choices"][0]["message"]
        # The reply carries a function call only if the model chose to use the function.
        if response_message.get("function_call"):
            function_args = json.loads(response_message["function_call"]["arguments"])
            print(function_args)  # print out the structured response

Testing it out

Let test this function out on a sample customer complaint letter. Given this is a complaint, we can expect a negative sentiment. Let’s see how GPT performs.

This is the result from calling run_structured_response. We got a JSON that captures the sentiment type, a sentiment score, as well as the top 3 examples from the text that supports the claim.

    {
        "sentiment": "negative",
        "sentimentScore": 80,
        "supportingEvidence": [
            {
                "example": "The sofa is defective.",
                "score": 90
            },
            {
                "example": "One of the legs broke off on March 31, 2021.",
                "score": 85
            },
            {
                "example": "The store manager, Aaron, would not speak to me.",
                "score": 75
            }
        ]
    }
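Since the model is not guaranteed to fill in every field, it can help to check the parsed arguments before handing them to the rest of a program. The following is a minimal sketch of such a check, not part of the original snippet: the helper names `missing_fields` and `sort_evidence` are hypothetical, the schema is trimmed to its top-level `required` list, and the sample reply is hand-written with one field deliberately left out.

```python
def missing_fields(args, schema):
    """Return the required top-level fields that are absent from the arguments."""
    return [name for name in schema.get("required", []) if name not in args]


def sort_evidence(args):
    """Return supporting evidence ordered from strongest to weakest score."""
    evidence = args.get("supportingEvidence", [])
    return sorted(evidence, key=lambda item: item["score"], reverse=True)


# Trimmed-down schema and a hand-written reply that lacks "sentimentScore".
schema = {"required": ["sentiment", "sentimentScore", "supportingEvidence"]}
reply = {
    "sentiment": "negative",
    "supportingEvidence": [
        {"example": "The store manager, Aaron, would not speak to me.", "score": 75},
        {"example": "The sofa is defective.", "score": 90},
    ],
}

print(missing_fields(reply, schema))
print([item["score"] for item in sort_evidence(reply)])
```

If `missing_fields` returns anything, one option is to prompt again and name the missing values explicitly. Sorting locally also avoids relying on the model to honor the "sorted list by score" wording in the schema description.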
